Today, the giants of the IT industry have become leaders in the use of artificial intelligence and machine learning. At Apple, research and development focuses on improving Siri, its virtual assistant, as well as machine translation, object recognition in photos, and recommendation algorithms for streaming services.

Litslink has a great article for readers who want to learn more about how machine learning algorithms manipulate data and how they can help a company become a leader in the technology sector. What is more, according to a recent report from PwC, artificial intelligence and machine learning are expected to add $15.7 trillion to global GDP by 2030.

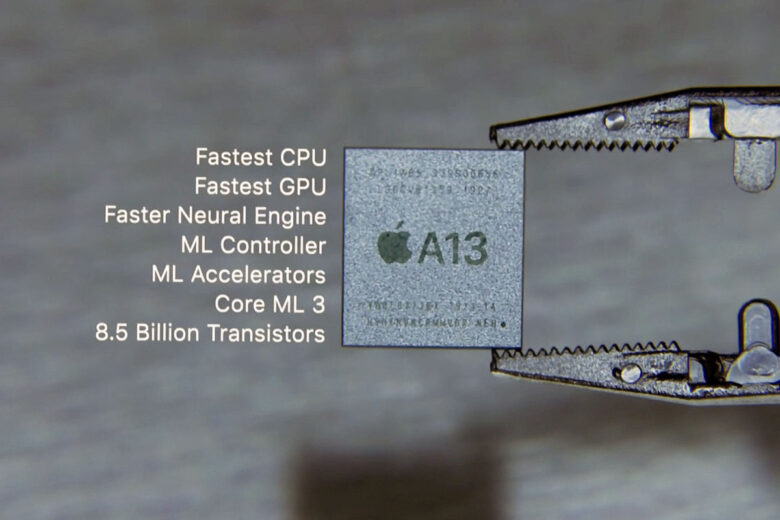

ML in Apple A13 Bionic Chipset

Source: macworld.com

At the heart of the iPhone is a chipset that has been unrivaled in performance for years. The updated Apple A13 Bionic only strengthened Apple’s lead in speed and performance over the ubiquitous Qualcomm Snapdragon 855, found in many Android smartphones. Apple claims the A13 is the best machine learning platform ever used in a smartphone, thanks to several improvements. The main one is the introduction of new dedicated accelerators for matrix multiplication, the most common operation in machine learning workloads. Overall, a single processor has a throughput of up to 1 trillion operations per second, reportedly six times faster than its counterparts. Among other things, the A13 is equipped with a machine learning controller that coordinates work across the CPU, GPU, and Neural Engine, making it a fully integrated platform that balances efficiency and performance.
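To make the matrix multiplication point concrete, here is a minimal Python sketch of a toy dense layer computed as a matrix product, with a rough operation count. The layer sizes are hypothetical and chosen only for illustration, and the count treats each multiply-accumulate as two operations; it is not a model of how the A13 actually schedules work.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    rows, inner, cols = len(a), len(b), len(b[0])
    if any(len(row) != inner for row in a):
        raise ValueError("inner dimensions do not match")
    return [
        [sum(a[i][k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for i in range(rows)
    ]


def matmul_ops(rows, inner, cols):
    """Approximate operation count, treating each multiply-accumulate as two operations."""
    return 2 * rows * inner * cols


# A toy dense layer: 2 inputs with 3 features each, projected to 2 outputs.
inputs = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
weights = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs = matmul(inputs, weights)

# Hypothetical layer size (1 input vector, 1024 -> 1024 units), for scale only.
ops = matmul_ops(1, 1024, 1024)
OPS_PER_SECOND = 1e12  # the "1 trillion operations per second" figure from the text
seconds = ops / OPS_PER_SECOND
```

Under these assumptions, a single 1024-by-1024 layer needs about two million operations, which at the quoted throughput would take roughly two microseconds.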

AI and ML in the Voice Assistant Siri

Source: 9to5google.com

Apple plans to use AI technology to improve and expand the functionality of the voice assistant Siri. A few years ago, Siri evolved from a conventional voice recognition system into one built on machine learning and deep neural networks. Users didn’t notice, but Siri started learning each time it failed to understand a user’s request.

Machine learning now powers not only timely suggestions while you use applications, but also real-time processing. For example, you can give Siri several commands in a row: “Turn on navigation, order coffee at work, and turn on the music.”

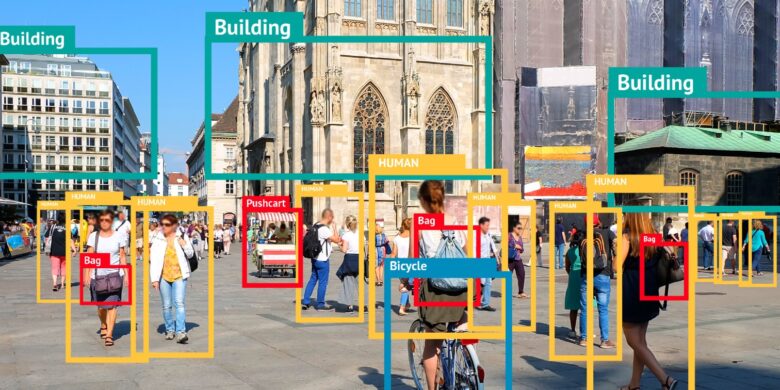

AI in Recognition of Objects

Source: bitmovin.com

Apple has created an algorithm that improves how AI trained on computer-generated images recognizes objects. Synthetic images are often used to train neural networks quickly when real material is scarce. As a result, an AI trained on computer-generated images often copes poorly with its task and misidentifies objects in real images. To solve this problem, Apple researchers have developed a method that enhances synthetic images. Better training datasets are created by using generated and real images together.

The algorithm’s efficiency was tested by comparing the object detection accuracy of two neural networks, one trained on conventional synthetic images and one trained on enhanced images. The second network identified objects in real frames 22 percent better than the first.
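The idea of drawing on generated and real images in the same training set can be sketched in a few lines of Python. This is only an illustration of batch mixing, not Apple's enhancement method; the function name `mixed_batches`, the `real_fraction` parameter, and the placeholder samples are all assumptions made for the example.

```python
import random


def mixed_batches(real, synthetic, batch_size, real_fraction, seed=0):
    """Yield training batches that draw from both real and synthetic samples."""
    if not 0.0 <= real_fraction <= 1.0:
        raise ValueError("real_fraction must be between 0 and 1")
    rng = random.Random(seed)
    n_real = round(batch_size * real_fraction)
    n_synth = batch_size - n_real
    if n_real > len(real) or n_synth > len(synthetic):
        raise ValueError("not enough samples for the requested batch")
    while True:
        # Sample each source separately, then shuffle so the order carries no signal.
        batch = rng.sample(real, n_real) + rng.sample(synthetic, n_synth)
        rng.shuffle(batch)
        yield batch


# Placeholder samples standing in for labeled images (hypothetical data).
real = [("real", i) for i in range(10)]
synthetic = [("synthetic", i) for i in range(100)]
batch = next(mixed_batches(real, synthetic, batch_size=8, real_fraction=0.25))
real_count = sum(1 for source, _ in batch if source == "real")
```

Keeping the real fraction explicit makes it easy to experiment with how much scarce real data each batch should contain.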

AI and ML in the iPhone’s Camera

Source: techradar.com

Several years ago, engineers at Apple realized that the iPhone’s camera could be made smarter by using powerful machine learning algorithms known as neural networks. The iPhone X added a new portrait mode that can adjust the lighting on people’s faces and skillfully blur backgrounds. All this is due to a new module added to the iPhone’s main processor: a neural engine designed for machine learning.

This is the hardware used for computational photography. Apple uses this technology to control the portrait mode of its dual-camera phones. The iPhone’s DSP uses machine learning techniques to recognize people with one camera, while the second camera is used to adjust the depth of field, blur the background, and highlight the subject. Recognizing people with machine learning is not new; it is essentially the same as categorizing images, only faster and in real time.

Besides, the iPhone’s photo recognition system automatically sorts images of the same person into a separate folder. It analyzes the faces in each photo with a face analysis API and organizes the photos according to the results. When the user corrects a mistake made by the program, it adjusts its grouping and thus learns.

ML in App Development Technologies

Source: arhamsoft.com

Core ML is Apple’s machine learning framework for apps, which helps developers run artificial intelligence tools easily on mobile devices. Core ML 3 supports acceleration of new, advanced real-time machine learning models. It supports over 100 model layer types, so applications can leverage high-quality, previously unavailable models for deep analysis of vision, natural language, and speech.

Besides, for the first time, developers can update ML models on a device using model personalization. This technology enables personalized features without compromising user privacy. And with the dedicated Create ML application, developers can build machine learning models without having to write code. Training several models on different datasets is now available for new model types: object recognition, activity classification, and sound classification.
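The principle behind on-device personalization can be shown with a small Python sketch: a generic model ships with the app, and only its final layer is adjusted using data that never leaves the device. This is a conceptual illustration rather than the Core ML API; `extract_features`, `personalize`, the weights, and the sample data are hypothetical.

```python
def extract_features(x):
    """Stand-in for the frozen, pre-trained part of a model (hypothetical)."""
    return [x, 1.0]


def predict(weights, x):
    """Apply the final linear layer to the frozen features."""
    return sum(w * f for w, f in zip(weights, extract_features(x)))


def personalize(weights, local_samples, learning_rate=0.05, epochs=500):
    """Fine-tune only the final linear layer on data that stays on the device."""
    weights = list(weights)  # copy so the shipped model stays unchanged
    for _ in range(epochs):
        for x, target in local_samples:
            features = extract_features(x)
            error = sum(w * f for w, f in zip(weights, features)) - target
            # One gradient step for squared error on a single sample.
            weights = [w - learning_rate * error * f for w, f in zip(weights, features)]
    return weights


shipped_weights = [1.0, 0.0]                          # generic model: y = x
local_samples = [(0.0, 0.5), (1.0, 1.5), (2.0, 2.5)]  # this user's data: y = x + 0.5
personal_weights = personalize(shipped_weights, local_samples)
prediction = predict(personal_weights, 3.0)
```

Because the update runs locally and only the weights change, the user's samples do not need to be uploaded anywhere, which is the privacy benefit the paragraph above describes.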

AI and ML in the Analysis of Users’ Behavior

Source: medium.com

Artificial intelligence will not only make possible the emergence of new features in Apple applications and services but will also help developers create software and analyze user audiences.

For more than a decade, marketers have been trying to solve the same problem: how to learn an audience’s preferences and needs in order to offer the right product. In an era of globalization and accelerated progress, they now have effective tools based on neural networks in their arsenal.

Apple uses machine learning technologies for personalization, the creation of unique content, workflow streamlining, and distribution. In other words, AI is taking on more and more of the tasks that marketers have traditionally performed.

AI and ML in Apple Watch

Source: venturebeat.com

Apple Watch continuously analyzes your movements and heart rate in various situations and at different levels of exertion. Apps built on this data can already predict when a person will wake up. In the future, such applications may even be able to predict a stroke or heart attack, which could save many lives. AI discovers structures and patterns in data that allow an algorithm to learn a certain skill: the algorithm becomes a classifier or predictor. At the same time, the models adapt as new data becomes available.
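A model that adapts as new data arrives can be illustrated with a simple running baseline. The Python sketch below keeps an exponentially weighted mean and variance of heart rate readings and flags values far from the wearer's own baseline. It is not Apple's algorithm; the class name, thresholds, and readings are assumptions chosen for the example.

```python
class HeartRateBaseline:
    """Tracks a running mean and variance and flags unusual readings."""

    def __init__(self, alpha=0.1, threshold=3.0, warmup=5):
        self.alpha = alpha          # how quickly the baseline adapts
        self.threshold = threshold  # allowed deviation, in standard deviations
        self.warmup = warmup        # readings to observe before flagging
        self.mean = None
        self.variance = 0.0
        self.count = 0

    def update(self, bpm):
        """Return True if bpm looks unusual, then fold it into the baseline."""
        if self.mean is None:
            self.mean = float(bpm)
            self.count = 1
            return False
        deviation = bpm - self.mean
        std = self.variance ** 0.5
        unusual = (
            self.count >= self.warmup
            and std > 0
            and abs(deviation) > self.threshold * std
        )
        # Exponentially weighted updates of mean and variance.
        self.mean += self.alpha * deviation
        self.variance = (1 - self.alpha) * (self.variance + self.alpha * deviation ** 2)
        self.count += 1
        return unusual


monitor = HeartRateBaseline()
readings = [62, 64, 61, 63, 62, 63, 62, 120]  # hypothetical resting readings, then a spike
flags = [monitor.update(bpm) for bpm in readings]
```

Here only the final reading is flagged, because the baseline is learned from the wearer's own recent data rather than from a fixed population-wide limit.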

Machine learning and artificial intelligence are important to Apple’s future because they are fundamentally changing the way people interact with devices. Apple is advancing machine learning and integrating it into its products to help customers live better lives and to deliver more personal, intelligent, and natural interactions.